OPC UA

OPC UA is a common network protocol for reading data from and writing data to PLCs. It is supported by most PLC vendors.

OPC UA is a client/server protocol. The hopit Edge OPC UA Target is always the client and communicates with an external OPC UA server.

With the Namespace discovery feature, all available Signals can be discovered.

Configuration

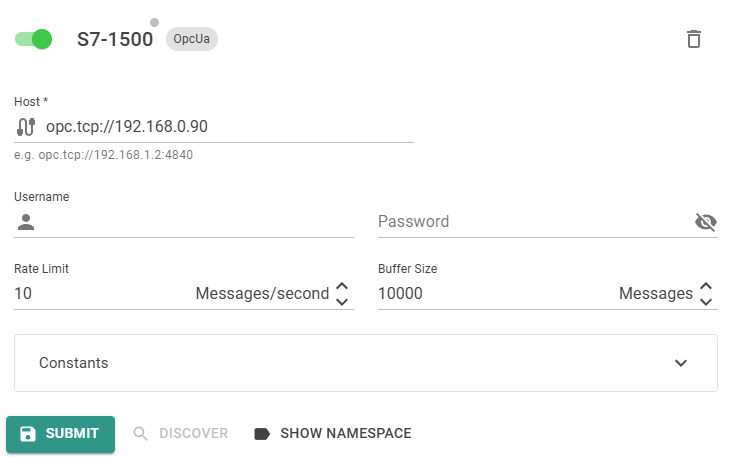

The OPC UA Target has the following parameters:

- Host: Host name or IP Address of the IPC.
- UserName: Optional user name.
- Password: Optional password.
- TargetType: The type is always OpcUa for this Target.

Corresponding Edge configuration and Device Twin definition to activate the OPC UA Target service:

- Edge-UI

- Device Twin

In this example, S7-1500 is the name of the Target. Any unique name can be used.

    {
      "S7-1500": {
        "Host": "opc.tcp://192.168.1.20:4840/",
        "UserName": null,
        "Password": null,
        "TargetType": "OpcUa",
        "MessagesPerSecondLimit": 1.0,
        "BufferSize": 1,
        "Constants": {},
        "Enabled": true
      }
    }
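When several Targets have to be defined, it can help to generate this fragment from a script instead of editing it by hand. The following is a minimal sketch, not part of hopit Edge: the helper name `build_opcua_target` is hypothetical, and the values for `MessagesPerSecondLimit`, `BufferSize`, `Constants` and `Enabled` are simply copied from the example above.

```python
import json


def build_opcua_target(name, host, user_name=None, password=None):
    """Return a Device Twin fragment for one OPC UA Target (hypothetical helper)."""
    if not name:
        raise ValueError("Target name must be a non-empty, unique string")
    return {
        name: {
            "Host": host,
            "UserName": user_name,  # None is serialized as JSON null
            "Password": password,
            "TargetType": "OpcUa",  # always OpcUa for this Target
            # The remaining values are copied from the example above.
            "MessagesPerSecondLimit": 1.0,
            "BufferSize": 1,
            "Constants": {},
            "Enabled": True,
        }
    }


fragment = build_opcua_target("S7-1500", "opc.tcp://192.168.1.20:4840/")
print(json.dumps(fragment, indent=2))
```

The printed JSON has the same keys and values as the definition shown above and can be adapted by changing the name and host arguments.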

The OPC UA Target automatically generates a self-signed certificate to enable message encryption. On connection, all available endpoints are read from the server. The discovered endpoints are listed in the hopit Edge Log. The endpoint with the highest security level is selected automatically. If the connection fails, the Target falls back to the endpoint with the next lower security level.

Supported security policies are:

- Aes128_Sha256_RsaOaep
- Basic256Sha256
- Basic256
- Basic128Rsa15
- None
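The endpoint selection with fallback can be illustrated with a short sketch. This is not the hopit Edge implementation: the `Endpoint` class, the `connect_with_fallback` function and the `try_connect` callback are hypothetical names, and the numeric `security_level` stands in for the security level that an OPC UA server reports for each endpoint.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Optional

SUPPORTED_POLICIES = {
    "Aes128_Sha256_RsaOaep",
    "Basic256Sha256",
    "Basic256",
    "Basic128Rsa15",
    "None",
}


@dataclass(frozen=True)
class Endpoint:
    url: str
    security_policy: str
    security_level: int  # higher means more secure, as reported by the server


def connect_with_fallback(
    endpoints: Iterable[Endpoint],
    try_connect: Callable[[Endpoint], bool],
) -> Optional[Endpoint]:
    """Try supported endpoints from highest to lowest security level."""
    candidates = [e for e in endpoints if e.security_policy in SUPPORTED_POLICIES]
    for endpoint in sorted(candidates, key=lambda e: e.security_level, reverse=True):
        if try_connect(endpoint):
            return endpoint  # first successful connection wins
    return None  # no endpoint could be connected


# Hypothetical discovery result; the strongest endpoint is assumed to fail.
discovered = [
    Endpoint("opc.tcp://192.168.1.20:4840/", "None", 0),
    Endpoint("opc.tcp://192.168.1.20:4840/", "Basic256Sha256", 3),
    Endpoint("opc.tcp://192.168.1.20:4840/", "Basic256", 2),
]
selected = connect_with_fallback(
    discovered, lambda e: e.security_policy != "Basic256Sha256"
)
print(selected.security_policy)
```

In this run the attempt with Basic256Sha256 is simulated as failing, so the sketch falls back to the Basic256 endpoint, which has the next lower security level.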

Data Streaming

The Signals to read or write are configured in the Router by adding the Node ID as the signal name.
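OPC UA Node IDs are commonly written in the string notation `ns=<namespace index>;<type>=<identifier>`, where the type is `i` (numeric), `s` (string), `g` (GUID) or `b` (opaque), and a missing `ns=` part means namespace 0. The sketch below checks a signal name against that notation before it is entered in the Router. It assumes that the Router expects this standard notation; the `parse_node_id` helper and the example Node IDs are hypothetical.

```python
import re
from typing import NamedTuple


class NodeId(NamedTuple):
    namespace: int
    id_type: str  # "i", "s", "g" or "b"
    identifier: str


# Optional "ns=<index>;" prefix, followed by "<type>=<identifier>".
_NODE_ID = re.compile(r"^(?:ns=(\d+);)?([isgb])=(.+)$")


def parse_node_id(text: str) -> NodeId:
    """Split a Node ID string into namespace index, type and identifier."""
    match = _NODE_ID.match(text)
    if match is None:
        raise ValueError(f"not a valid OPC UA Node ID string: {text!r}")
    namespace, id_type, identifier = match.groups()
    if id_type == "i" and not identifier.isdigit():
        raise ValueError(f"numeric Node ID needs a numeric identifier: {text!r}")
    return NodeId(int(namespace or 0), id_type, identifier)


# Hypothetical signal names for illustration only.
print(parse_node_id('ns=3;s="Data_DB"."Temperature"'))
print(parse_node_id("i=2258"))
```

A name that fails this check, for example one with a misspelled `ns=` prefix, raises a `ValueError` instead of being silently accepted.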

Signals must always be primitive data types. The supported types are:

- bool
- sbyte
- int16
- uint16
- int32
- uint32
- int64
- uint64
- float
- double
- string
- datetime
- guid
- bytestring
- xmlelement
- expandednodeid
- statuscode
- qualifiedname
- decimal

Reading whole structures or arrays is currently not supported.

Signals can also be read with a Regex matcher. See the Router documentation for details.

Signal discovery is available for this Target. See the Introduction for more details.

For information on how signals are named and labeled in Data Lakes, see the Data Lake documentation.